Computing infrastructure and data analysis software are of particular importance to the current and future productivity of the APS. Demand for increased computing at the APS is driven by new scientific opportunities, which are often enabled by technological advances in detectors and by advances in data analysis algorithms. These advances generate larger amounts of data, which in turn require more computing power to obtain near real-time results.

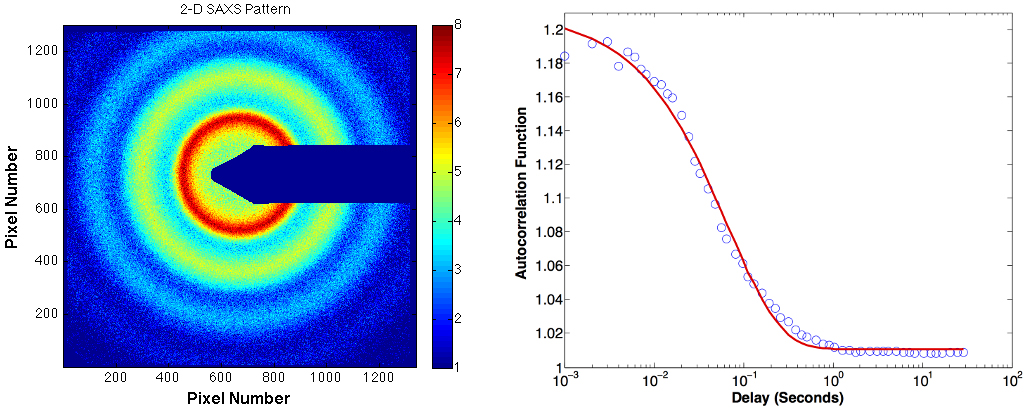

An example where advances in computation are critical is found in the X-ray Photon Correlation Spectroscopy (XPCS) technique (Fig. 1). XPCS is a unique tool for studying the dynamic nature of complex materials from micrometer to atomic length scales, and on time scales ranging from seconds to, at present, milliseconds. The recent development and application of higher-frequency detectors allow the investigation of faster dynamic processes, enabling novel science in a wide range of areas such as soft and hard matter physics, biology, and life sciences. A consequence of XPCS detector advancements is the creation of greater amounts of image data that must be processed within the time it takes to collect the next data set in order to guide the experiment. Parallel computational and algorithmic techniques and high-performance computing (HPC) resources are required to handle this increase in data.

To realize this, the APS has teamed with the Computing, Environment, and Life Sciences (CELS) directorate to use Magellan, a virtualized computing resource located in the Theory and Computer Science (TCS) building. Virtual computing environments separate physical hardware resources from the software running on them, isolating an application from the physical platform. The use of this remote virtualized computing affords the APS many benefits. Magellan’s virtualized environment allows the APS to install, configure, and update its Hadoop-based XPCS reduction software easily and without interfering with other users on the system. Its scalability allows the APS to provision more computing resources when larger data sets are collected, and to release those resources for others to use when they are not required. Further, the underlying hardware is supported and maintained by professional HPC engineers in CELS, relieving APS staff of this burden.

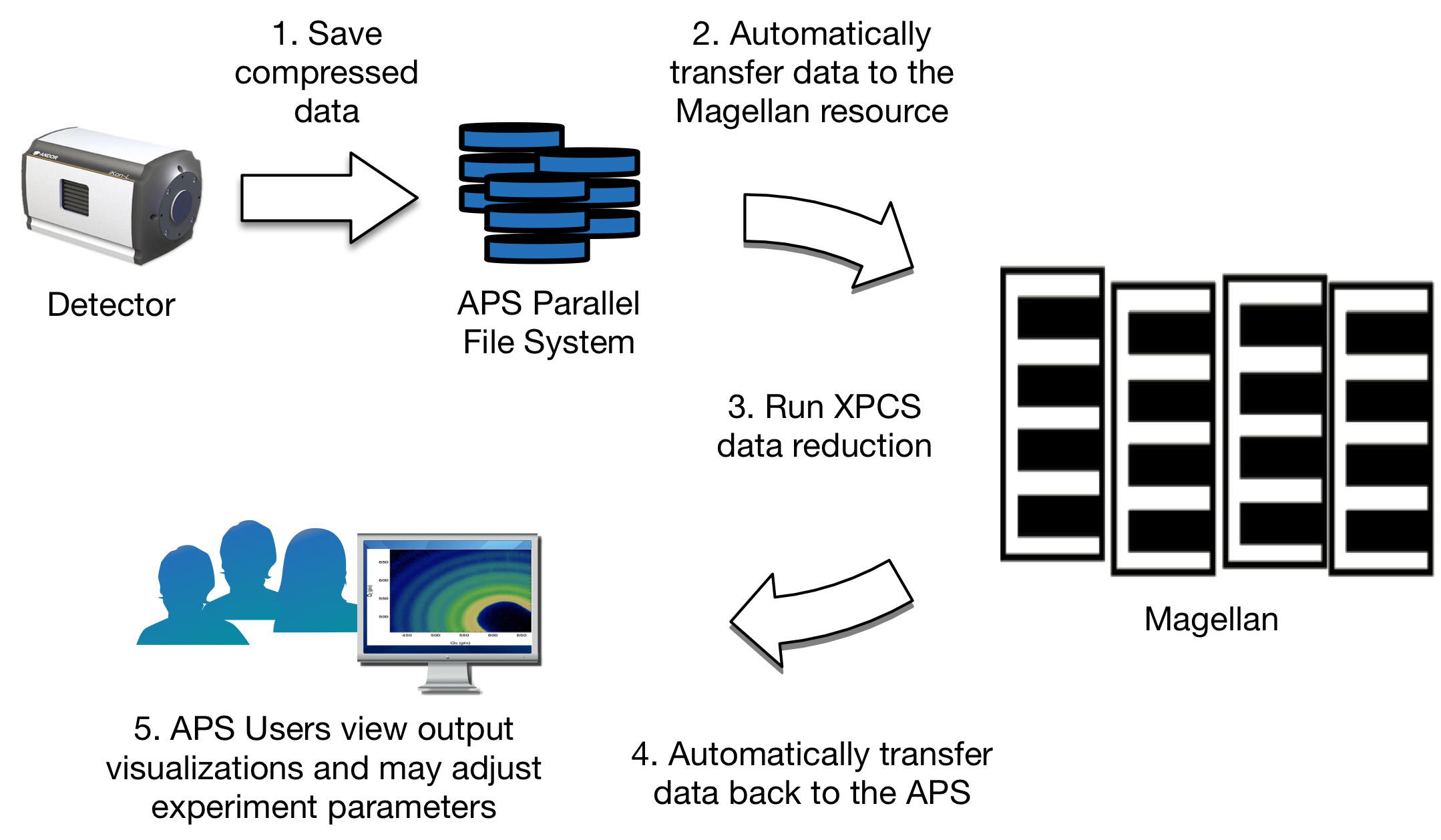

The XPCS workflow starts with raw data (120 MB/s) streaming directly from the detector, through an on-the-fly firmware discriminator, to a compressed file on the parallel file system located at the APS. Once the acquisition is complete, the data is automatically transferred as a structured HDF5 file using GridFTP to the Hadoop Distributed File System (HDFS) running on the Magellan resource in the TCS building. This transfer occurs over two dedicated 10 Gbps fiber optic links between the APS and the TCS building’s computer room. By bypassing intermediate firewalls, this dedicated connection provides a very low-latency, high-performance data pipe between the two facilities. Immediately after the transfer, the Hadoop MapReduce-based multi-tau data reduction algorithm is run in parallel on the provisioned Magellan compute instances, followed by Python-based error-fitting code. The Magellan resources provisioned for typical use by the XPCS application include approximately 120 CPU cores, 500 GB of distributed RAM, and 20 TB of distributed disk storage. Provenance information and the resultant reduced data are added to the original HDF5 file, which is automatically transferred back to the APS. Finally, the workflow pipeline triggers software for visualizing the data (Fig. 2).
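To give a feel for the multi-tau reduction step mentioned above, here is a minimal single-process sketch in Python with NumPy. It is not the APS Hadoop MapReduce implementation: the function name `multi_tau_g2`, the parameter `channels_per_level`, and the synthetic input are assumptions made for illustration. The sketch estimates the normalized intensity autocorrelation g2(tau) = <I(t) I(t + tau)> / (<I(t)> <I(t + tau)>) for one intensity time series, computing a few lags at the finest time resolution and then repeatedly averaging adjacent frames so that longer lags are evaluated on coarser, shorter series.

```python
import numpy as np


def multi_tau_g2(intensity, channels_per_level=8):
    """Estimate g2 at quasi-logarithmically spaced lags for one time series.

    `intensity` is a 1-D sequence of intensities (one pixel or one q-bin).
    Returns (lags, g2), with lags expressed in units of the frame time.
    Hypothetical helper for illustration only.
    """
    signal = np.asarray(intensity, dtype=float)
    lags, g2 = [], []
    bin_width = 1        # number of original frames per sample at this level
    first_channel = 1    # level 0 covers lags 1..m; later levels cover m/2+1..m

    # Stop once the coarsened series is too short to hold the largest channel.
    while len(signal) > channels_per_level:
        for k in range(first_channel, channels_per_level + 1):
            early, late = signal[:-k], signal[k:]
            # Symmetric normalization; assumes the mean intensity is nonzero.
            g2.append(np.mean(early * late) / (np.mean(early) * np.mean(late)))
            lags.append(k * bin_width)

        # Coarsen: average adjacent pairs, doubling the time per sample.
        n = (len(signal) // 2) * 2
        signal = 0.5 * (signal[:n:2] + signal[1:n:2])
        bin_width *= 2
        first_channel = channels_per_level // 2 + 1

    return np.array(lags), np.array(g2)


if __name__ == "__main__":
    # Synthetic, uncorrelated photon counts (assumed data, not APS data).
    rng = np.random.default_rng(0)
    counts = rng.poisson(lam=5.0, size=4096)
    lags, g2 = multi_tau_g2(counts)
    for lag, value in zip(lags, g2):
        print(f"lag={lag:5d}  g2={value:.4f}")
```

In this sketch each time series is correlated independently of every other, so the work divides naturally by pixel or by q-bin, which is the kind of structure that suits a map-style parallel framework.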

The whole process is completed shortly after data acquisition, typically in less than one minute, a significant improvement over previous setups. The faster turnaround time helps scientists make time-critical, near real-time adjustments to experiments, enabling greater scientific discovery. This virtualized system has been in production use at the APS 8-ID-I beamline during the 2015-3 run. It runs more than 50 times faster than a serial implementation.

Nicholas Schwarz, Suresh Narayanan, Alec Sandy, Faisal Khan, Collin Schmitz, Benjamin Pausma, Ryan Aydelott, and Daniel Murphy-Olson

The XPCS computing system was developed, and is supported and maintained, by the XSD Scientific Software Engineering & Data Management group (XSD-SDM) in collaboration with Suresh Narayanan (XSD-TRR) and Tim Madden (XSD-DET), with funding from the U.S. Department of Energy (DOE) Office of Science under Contract No. DE-AC02-06CH11357. The Magellan virtualized cloud-computing resource is supported by the Computing, Environment, and Life Sciences (CELS) directorate with funding from the DOE Office of Science.

Argonne National Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.